Data health is a critical yet often misunderstood concept for modern data teams. Measuring it effectively ensures you can answer key questions like, Can I trust this dataset? Do we have sufficient test coverage? Are we making progress in improving data quality? This guide dives into the why, what, and how of data health measurement and demonstrates how Elementary leverages data health scores to elevate data observability.

We also recommend watching the 'Measuring Data Health' webinar recording.

Why Measure Data Health?

Every data team faces scenarios where trust in data is questioned. Imagine presenting stakeholders with assurances of data quality, only to face their skepticism due to recurring discrepancies or delays. Now imagine starting that same conversation with hard metrics: “Our marketing dataset’s quality score has improved from 90% to 95% this month.” Data health scores bring a data-driven approach to discussions about quality, fostering transparency and trust.

For practitioners, data health scores transform vague test results into actionable insights. Instead of just reporting failed tests, engineers can say, "Our marketing dataset has a completeness score of 92%. It’s reliable despite minor null values.” This structured, quantitative perspective fosters better collaboration and prioritization.

Lessons from the Community

Elementary’s approach to data health evolved from conversations with our community. Early in 2023, users began requesting tags like completeness and freshness for quality validation. As we explored this, it became clear that many teams were independently categorizing their data tests into broader dimensions of quality.

This aligned with the Quality Dimensions Framework, an industry standard that organizes data quality into six common dimensions:

- Accuracy: Does the data align with real-world facts or business rules?

- Validity: Does the data conform to expected formats and ranges?

- Uniqueness: Are there duplicates?

- Completeness: Are all required fields populated?

- Consistency: Is the data uniform across datasets and sources?

- Freshness: Is the data up to date?

By categorizing data tests into these dimensions, teams gain granular insights into where their quality efforts are succeeding—or falling short.

Examples of Quality Dimensions in Action

To illustrate, let’s use the example of IMDb datasets:

- Freshness: If IMDb’s rating for The Godfather hasn’t been updated since 2000, it misrepresents current sentiment.

- Completeness: Missing a key cast member like Uma Thurman in Pulp Fiction diminishes the dataset’s usefulness.

- Uniqueness: Duplicate records for The Matrix with different release years create downstream inconsistencies.

- Consistency: If IMDb’s “Top 250 Movies” list shows 254 entries, users lose trust in its accuracy.

- Validity: A movie runtime listed as 1,500 minutes (when the longest movie ever made is 873 minutes) would be invalid.

- Accuracy: Listing Leonardo DiCaprio as the director of Inception instead of Christopher Nolan reflects a factual inaccuracy.

Each dimension addresses a distinct aspect of data health, allowing for targeted validation and monitoring.

Calculating Data Health Scores

There are multiple ways to calculate quality dimension scores. Here are two simple methods:

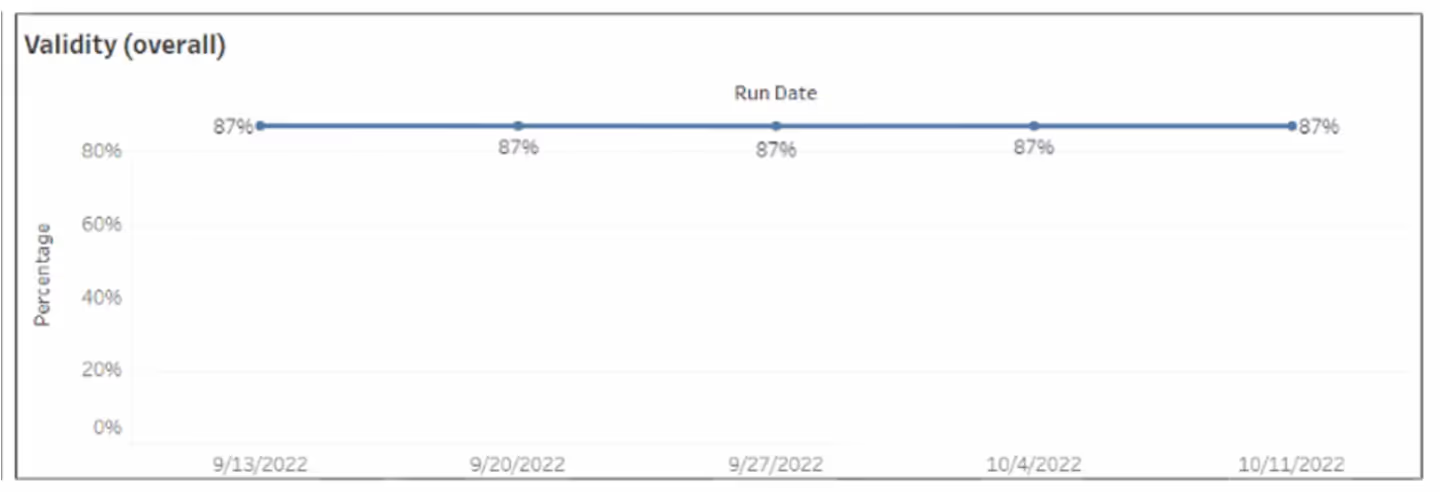

Based on Test Fail Rate

Each test receives a score based on its status:

- Passed: 1

- Warning: 0.5

- Failed: 0

Example: If four tests are run (three passed, one warning), the dimension score would be:

(3×1)+(1×0.5)+(0x0)÷ 4 = 87.5%

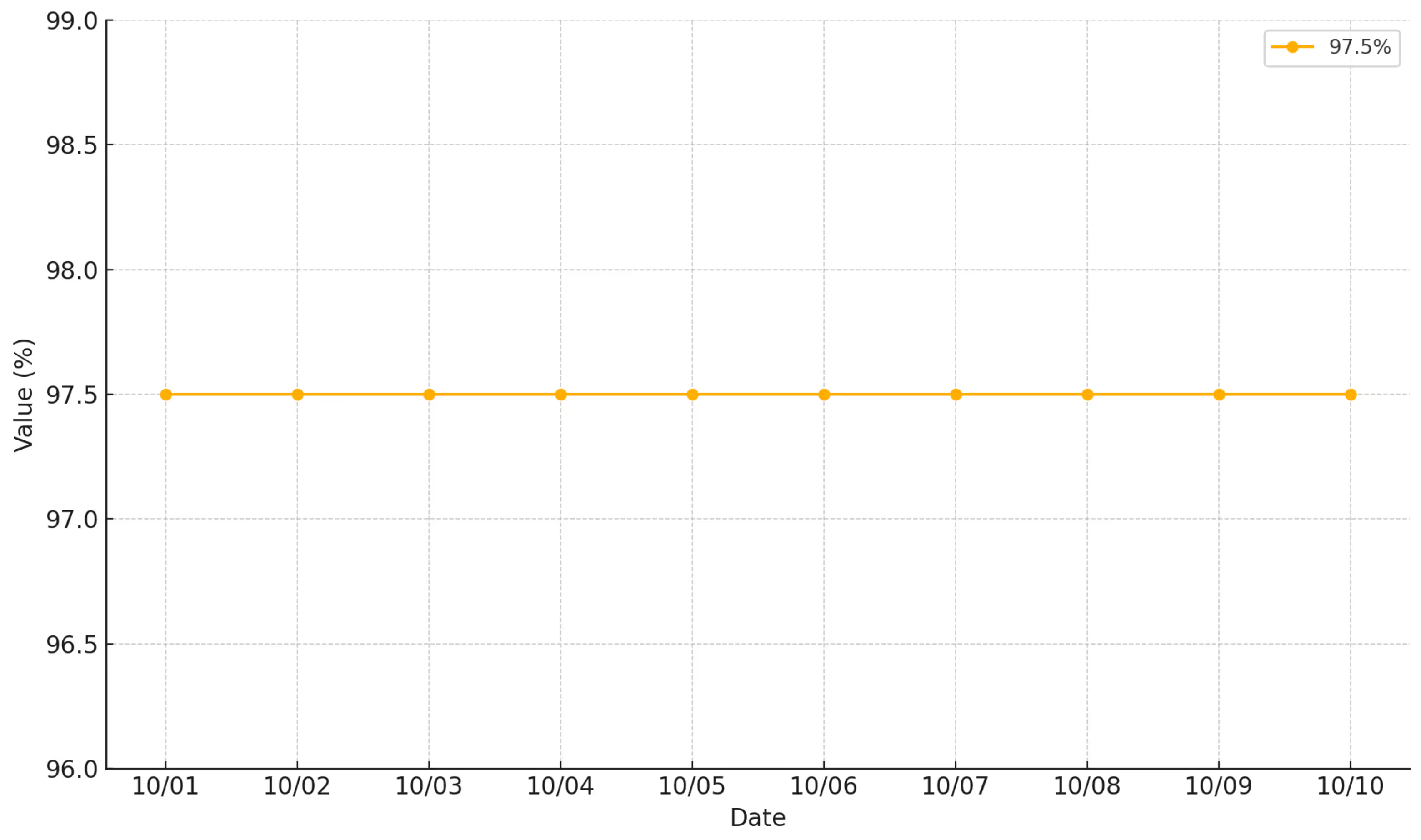

Based on Failed Row-Count

This approach calculates scores based on the proportion of rows affected by failed tests:

Test Score → (total row count - failed row count) / total row count

Quality dimension score → avg. (test score for each test in this dimension)

Example: If 4 tests are run- 3 passed with 1000 rows and 0 failed row count each, 1 test failed with 100 failed rows out of 1000 rows in the table.

- The score for a successful test would be --> (total row count - failed row count) / total row count --> (1000 - 0) / 1000 = 1

- The score for the failed test would be --> (total row count - failed row count) / total row count --> (1000 - 100) / 1000 = 0.9

- The quality dimension score would be --> avg. (test score for each test in this dimension) --> (3 * 1 + 1 * 0.9) / 4 = 0.975

Both methods allow teams to aggregate scores by dimension or dataset, providing a clear picture of overall data health and trends over time.

Challenges in the dbt Ecosystem

Implementing health scores within the dbt ecosystem presents unique challenges:

Test Mapping

The sheer variety of dbt tests, spanning multiple packages (e.g., dbt expectations, dbt utils), makes mapping them to quality dimensions complex and time-consuming. To help streamline this process, we created the dbt Test Hub. The hub offers curated documentation, practical use cases, and organizational tips to navigate the diverse landscape of dbt tests. While it simplifies some aspects, the diversity of tests still poses challenges for consistent measurement.

Aggregated Test Results

dbt tests often return aggregated results, making it hard to calculate the row-level impact of failures.

Calculating Data Health Scores from dbt Tests

Elementary automatically calculates quality dimension scores for dbt tests while addressing these two primary challenges: mapping tests to quality dimensions and handling disaggregated test results. Here’s how it works:

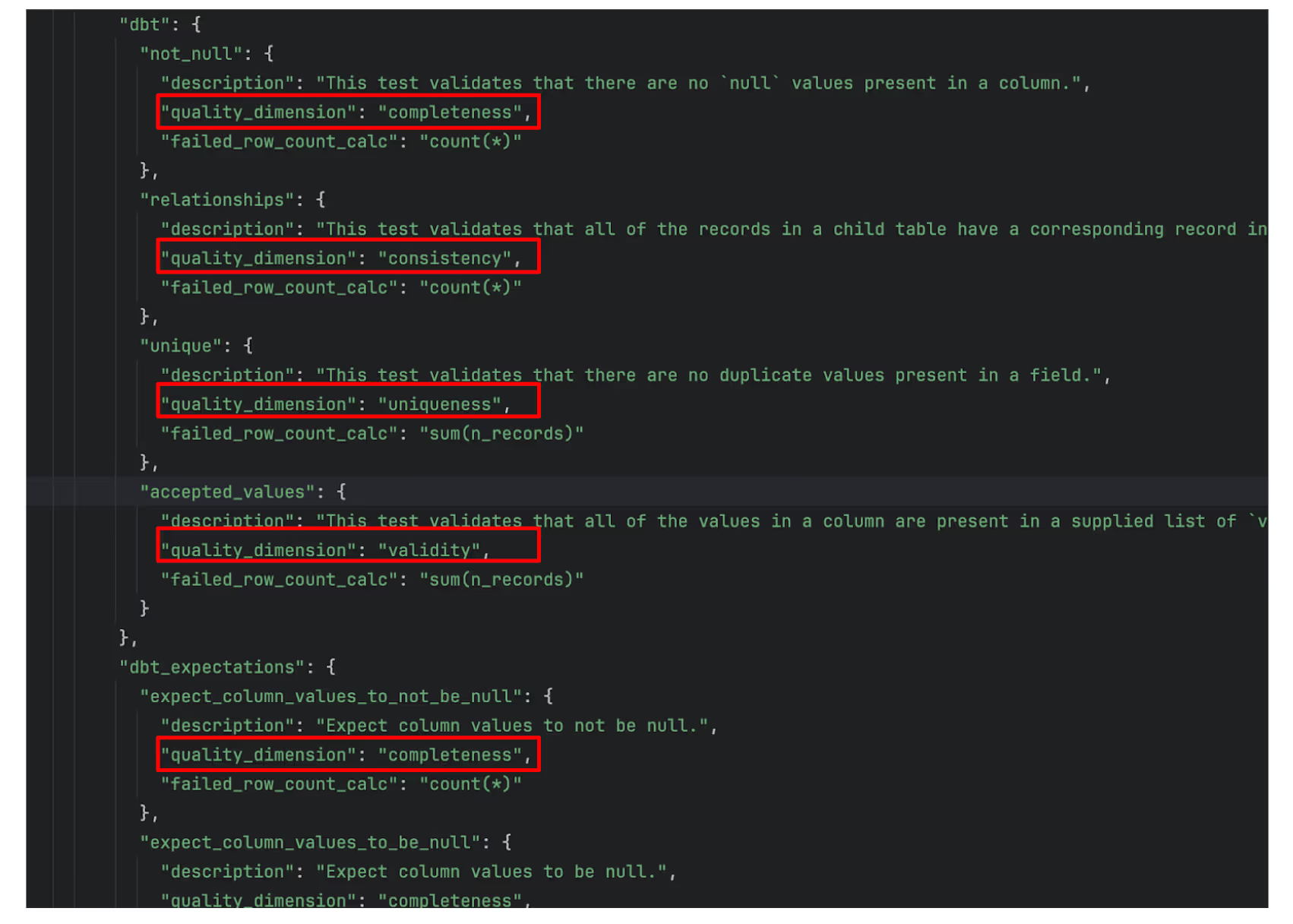

Mapping Tests to Quality Dimensions

Elementary performs the tedious work of mapping commonly used dbt tests (native tests, dbt expectations, dbt utils) to default quality dimensions. This allows for a structured approach to evaluating data health. Here is an example of how Elementary mapped these common tests into their relevant dimensions:

- Accuracy: Validated using tests like

accepted_valuesandexpression_is_true. - Completeness: Evaluated with

not_nullandnot_null_proportiontests. - Consistency: Assessed using

relationshiptests. - Uniqueness: Measured via

uniqueandunique_combination_of_columnstests. - Freshness: Determined using

dbt source freshnessorrecencytests. - Validity: Verified with tests like

expect_column_values_to_be_of_type.

Additionally, Elementary enables users to configure or override these mappings dynamically. For example:

Custom SQL (singular) tests can have their quality dimensions assigned using a meta field, ensuring flexibility and adaptability for unique project requirements.

Capturing Accurate Test Results

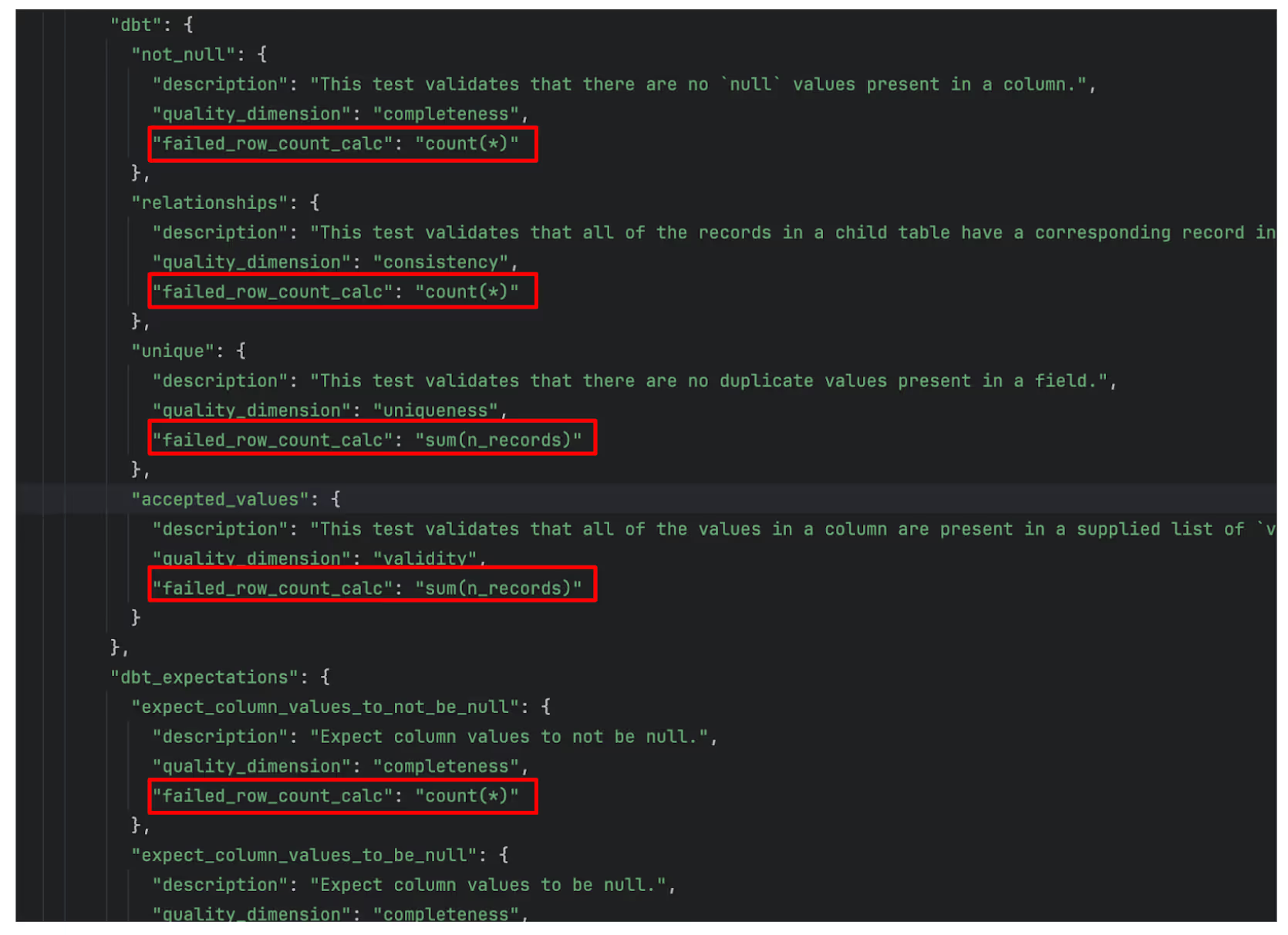

dbt test outputs are typically aggregated, which makes it difficult to measure failed row count. For example, a unique test might fail due to 1,200 duplicate rows, but dbt will return the result as a single aggregated failed result with the non unique value and the number of duplicated records in a column . Elementary solves this problem by:

- Overriding dbt Test Materialization: Elementary wraps test queries to capture the actual number of failed rows rather than just the aggregate result. This involves:

- Wrapping test queries with a summation logic that fits each dbt test’s logic to calculate the total failed row count before returning the result, as shown below.

- Enriching the Elementary Artifact Table: The test results stored in Elementary’s artifact table include:

- Aggregated failure counts (as returned by dbt).

- The precise number of failed rows, enabling more accurate calculation of quality dimensions.

Integration and Usability

Elementary seamlessly integrates with dbt’s natural workflow, ensuring that the enhanced data health calculations are fully compatible with existing pipelines. Users can access this enriched data through Elementary’s dashboards and leverage it to derive precise and actionable insights.

By combining automated test mapping and accurate calculation of the actual number of failed row count, Elementary makes it easier to calculate accurate, actionable data health scores in the dbt ecosystem.

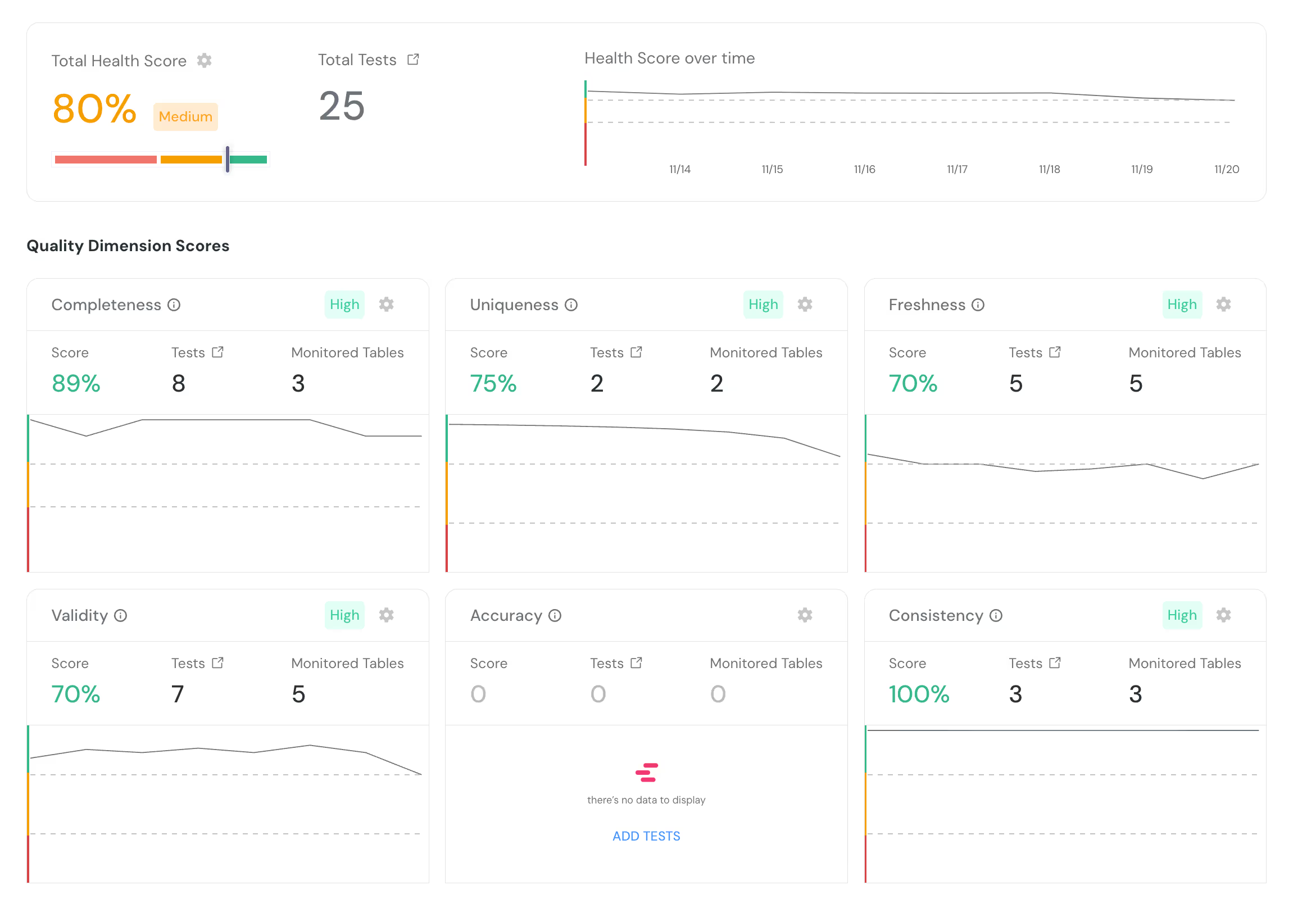

The Data Health Dashboard

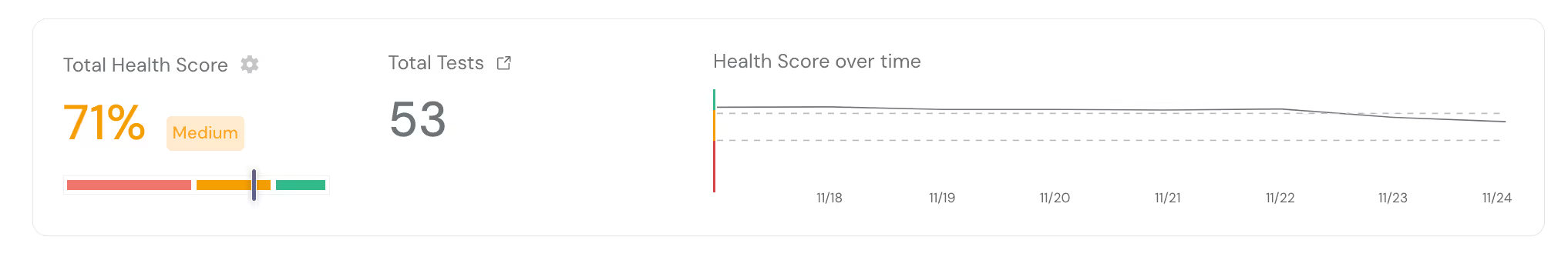

The Data Health Dashboard is a powerful tool designed to provide comprehensive insights into the quality of your data. It calculates and visualizes:

- Total Health Scores: An overall score representing the health of your data assets.

- Quality Dimension Scores: Individual scores for key dimensions, including completeness, uniqueness, freshness, validity, accuracy, and consistency.

Here are some of the key features:

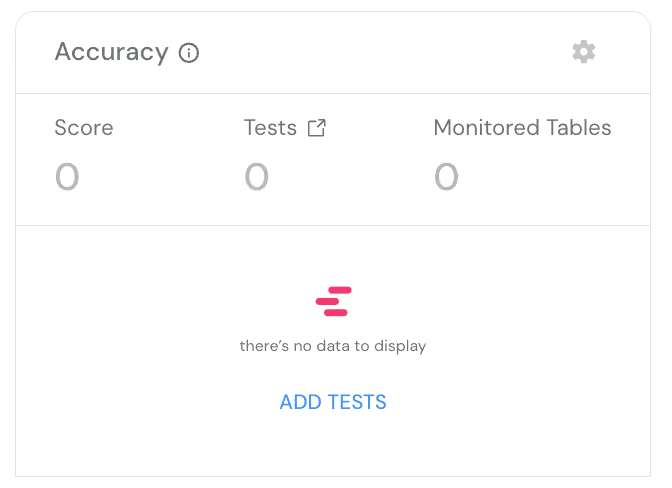

Coverage Gap Identification

If no tests are detected for a specific quality dimension, the dashboard highlights this as a coverage gap and recommends adding tests to gain visibility into that dimension.

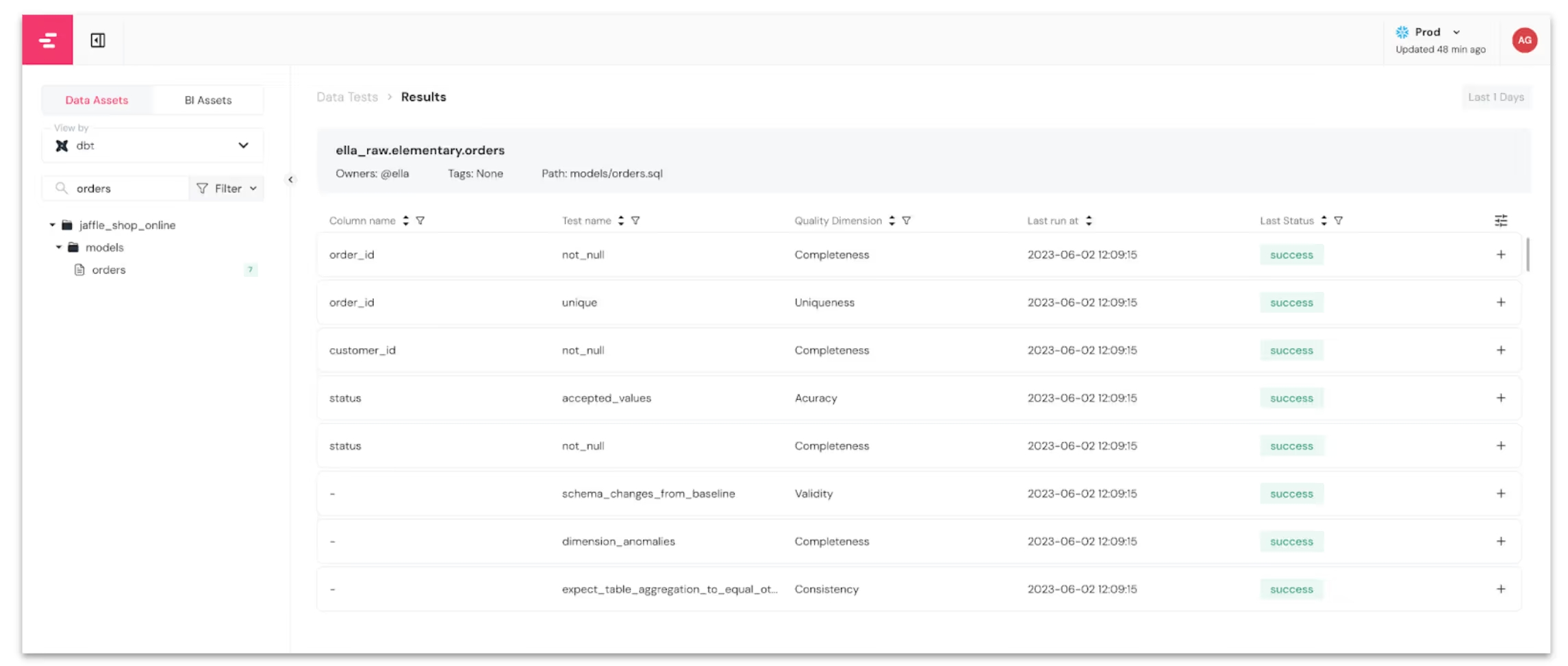

Quality Dimension Mappings

From the quality dimension scores, users can navigate directly into test results to investigate and understand how specific test outcomes contribute to the overall and dimension-level health scores.

A dedicated column in the dashboard displays the mapping of different dbt tests into corresponding quality dimensions, making it easy to track and manage test coverage.

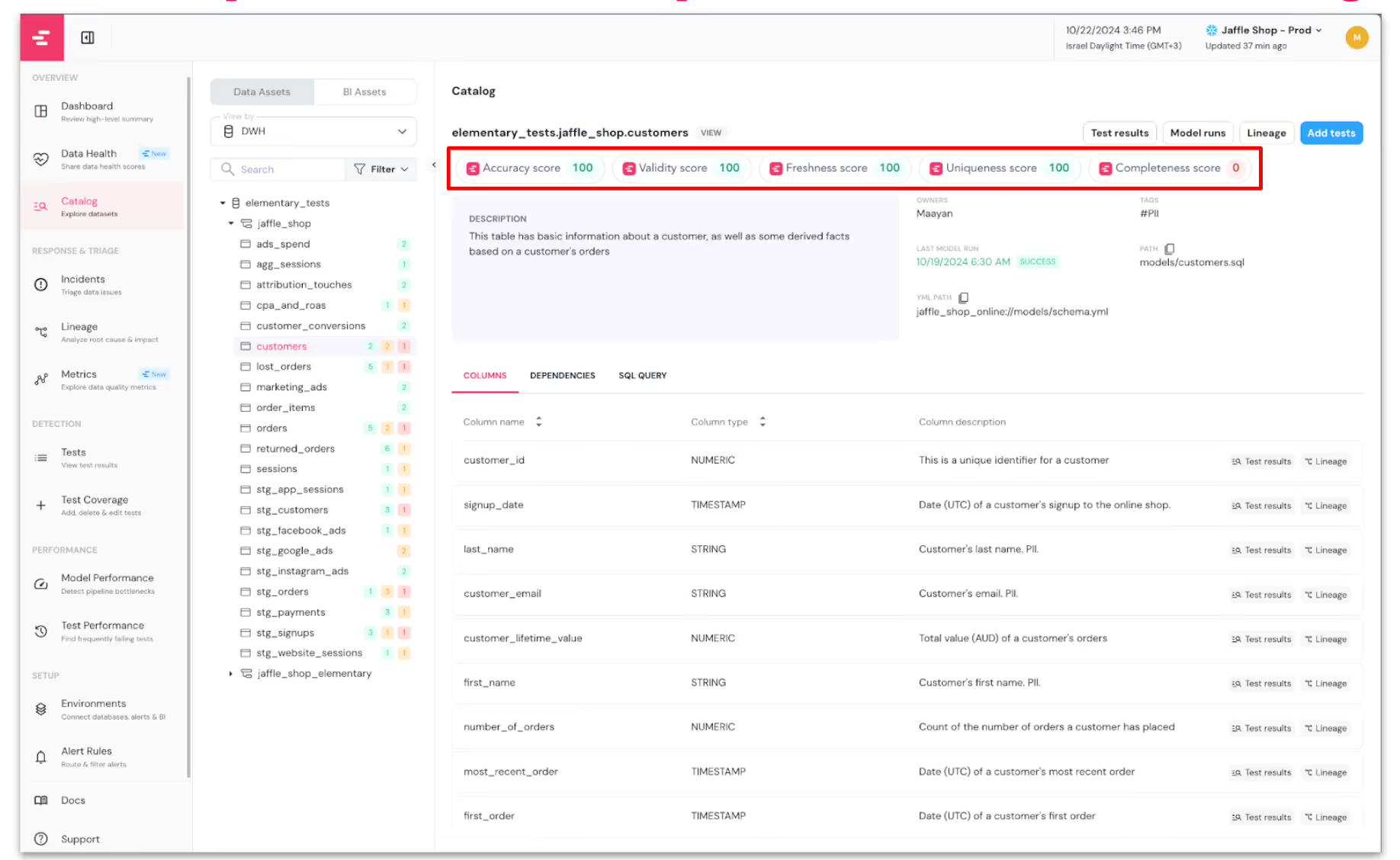

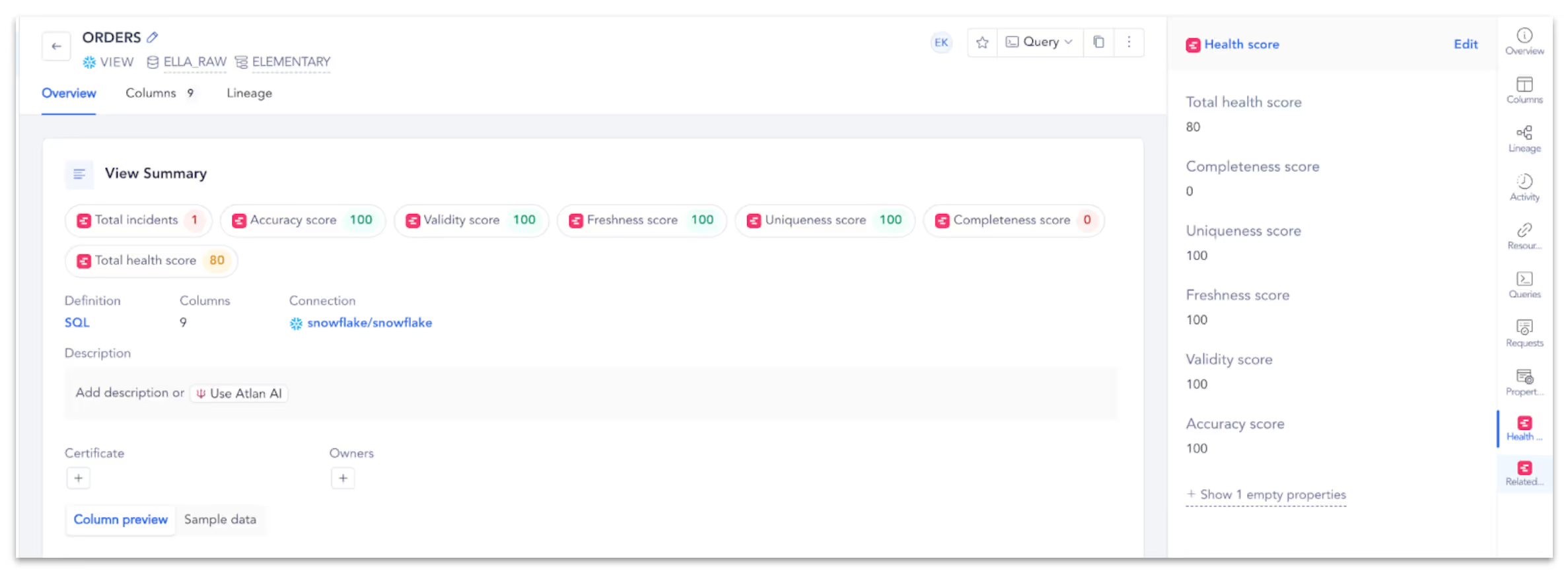

Native and External Catalog Integration

Elementary Cloud’s Native Catalog: An enriched alternative to dbt docs, featuring all Elementary test results, monitors, and quality dimension scores.

Integration with External Catalogs: Elementary integrates with tools like Atlan, embedding quality scores (overall and per dimension) into external catalog assets. This ensures data analysts can assess data trustworthiness directly in their workflow.

BI Tool Enrichment (Coming Soon)

Quality dimension scores will soon integrate with popular BI tools, allowing analysts to include these metrics in dashboards, empowering end-users to trust the data they consume.

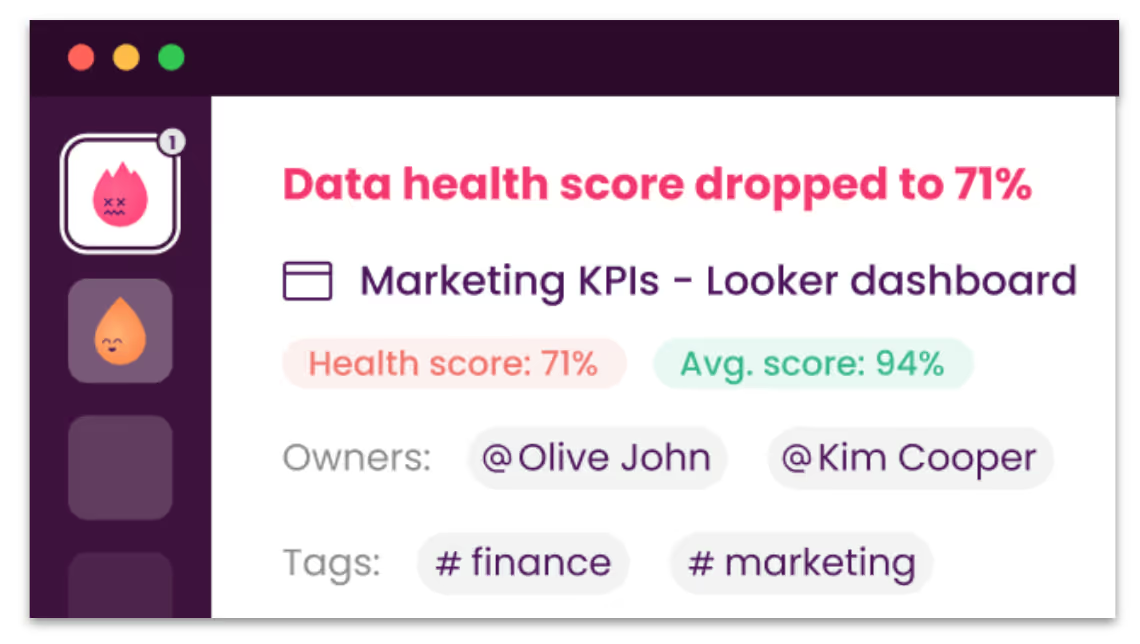

Alerts and Anomalies

- Alerts notify users of drops or anomalies in quality dimension scores.

- Summaries provide an overview of recent quality scores for data domains and products.

Domain-Level Insights

Users can assign tags to monitored data assets to group them by business domains (e.g., "sales"). The dashboard provides domain-specific insights, helping teams understand the quality status of critical data assets.

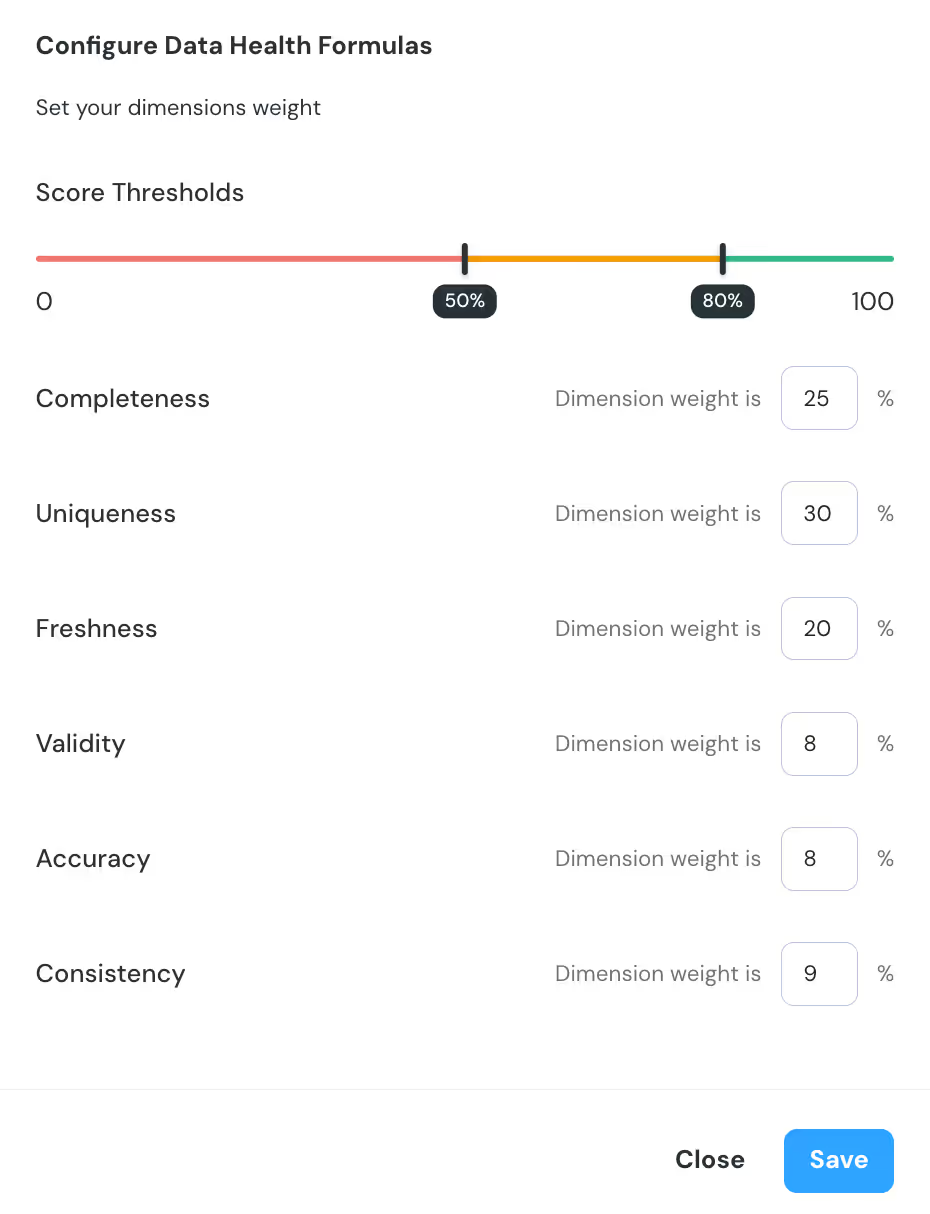

Customizable Thresholds and Weights

Configure thresholds for both total and dimension-level scores to classify data health as "fine," "warning," or "critical."

Assign weights to quality dimensions to customize the calculation of the total health score based on organizational priorities.

Trends Over Time

The dashboard tracks historical trends for both the total health score and individual quality dimensions, helping teams monitor improvements or emerging issues over time.

Start Measuring Data Health

Measuring data health is no longer optional—it’s essential for building trust in data. By adopting frameworks like quality dimensions and leveraging tools like Elementary, teams can move beyond ad hoc testing to a structured, transparent approach to data quality.

Start your journey with Elementary’s open-source tool or explore the comprehensive capabilities of Elementary Cloud.

.svg)