The new unstructured data validation monitors are available as part of Elementary OSS 0.18.0.

The Rise of Unstructured Data and Why It Matters

As LLMs and Gen AI initiatives grow, data teams are increasingly building pipelines to ingest unstructured data. But these pipelines are only as valuable as the quality of the data they process—if the data is incorrect, so are the insights and models built on top of it.

When unstructured data is ingested, it’s often stored as a long string in a data warehouse or lake—a black box that traditionally bypasses the quality checks applied to structured fields. This means critical information can flow through pipelines unchecked before further processing. But as more analytics and AI models depend on these unstructured fields, it’s time to bring data quality and monitoring into the picture.

AI-Powered Data Tests - Unstructured data requires “unstructured” tests

In order to test unstructured data, we needed to create “unstructured” tests.

The goal was to facilitate flexibility that better aligns with the nature of these data sets. This means Elementary users can now apply data validations in plain English using LLMs. Your tests can now do whatever you can describe in simple language, eliminating the need to code strict rules or configure parameters.

Here is an example:

models:

- name: crm

description: "A table containing contract details."

columns:

- name: contract_date

description: "The date when the contract was signed."

tests:

- elementary.ai_data_validation:

expectation_prompt: "There should be no contract date in the future"

Elementary's test `elementary.ai_data_validation` lets you validate any data column using AI and LLM models.

Why this is useful?

- Simplifies development – Just describe your validation, and the test handles the rest.

- Understands context – Leverages semantic awareness to enable logical, context-aware validations.

- Handles complex rules – Ideal for validations that are difficult to express using SQL.

Behind the scenes, Elementary leverages the built-in AI and LLM functions of the data warehouses. See our comprehensive guides for each data warehouse -

Is this secure? Yes!

The implementation leverages the LLM models provided by your data warehouse, hence no data leaves your environment to third-party AI vendors. Your data is validated using the AI functionality of the platform which host it.

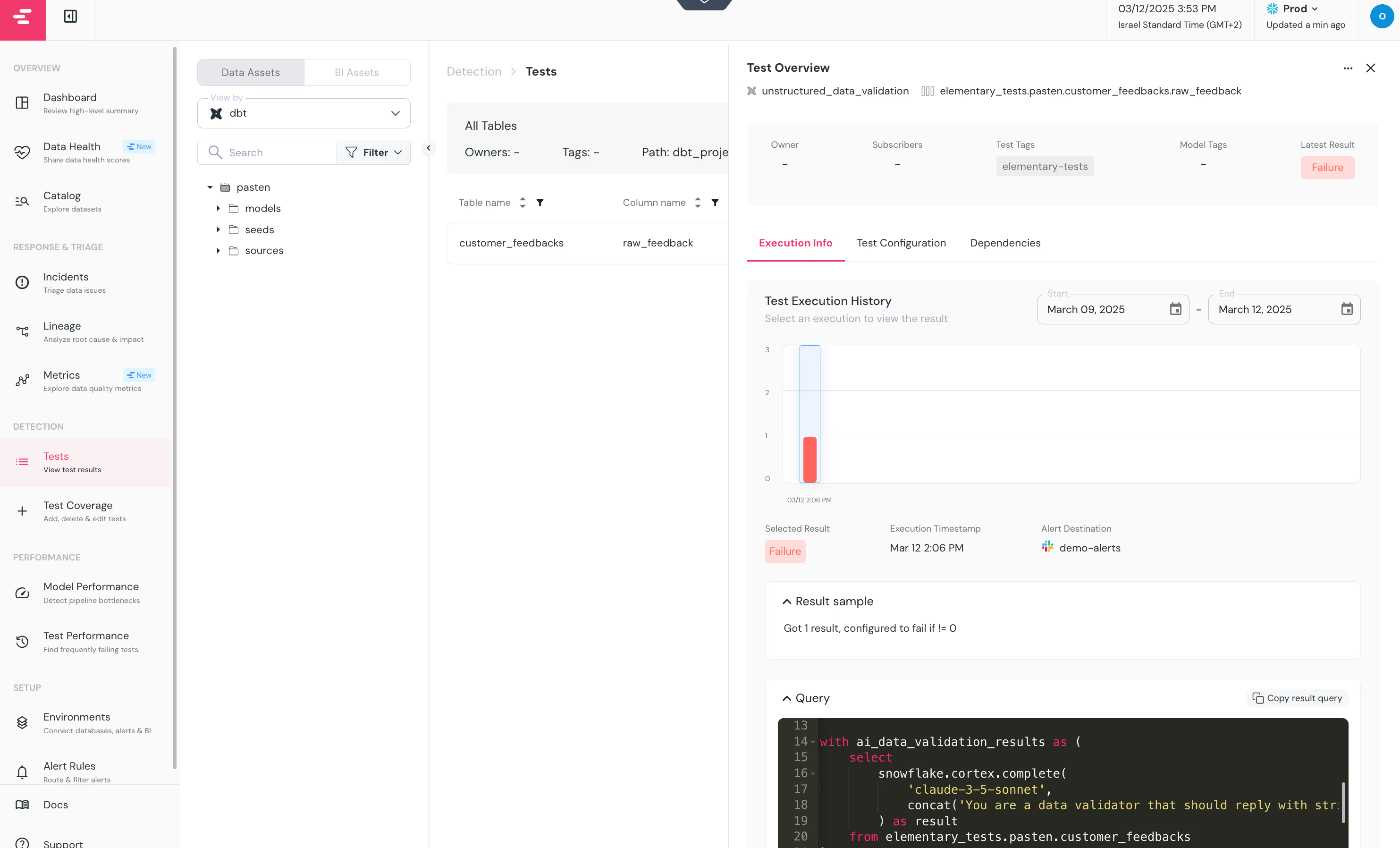

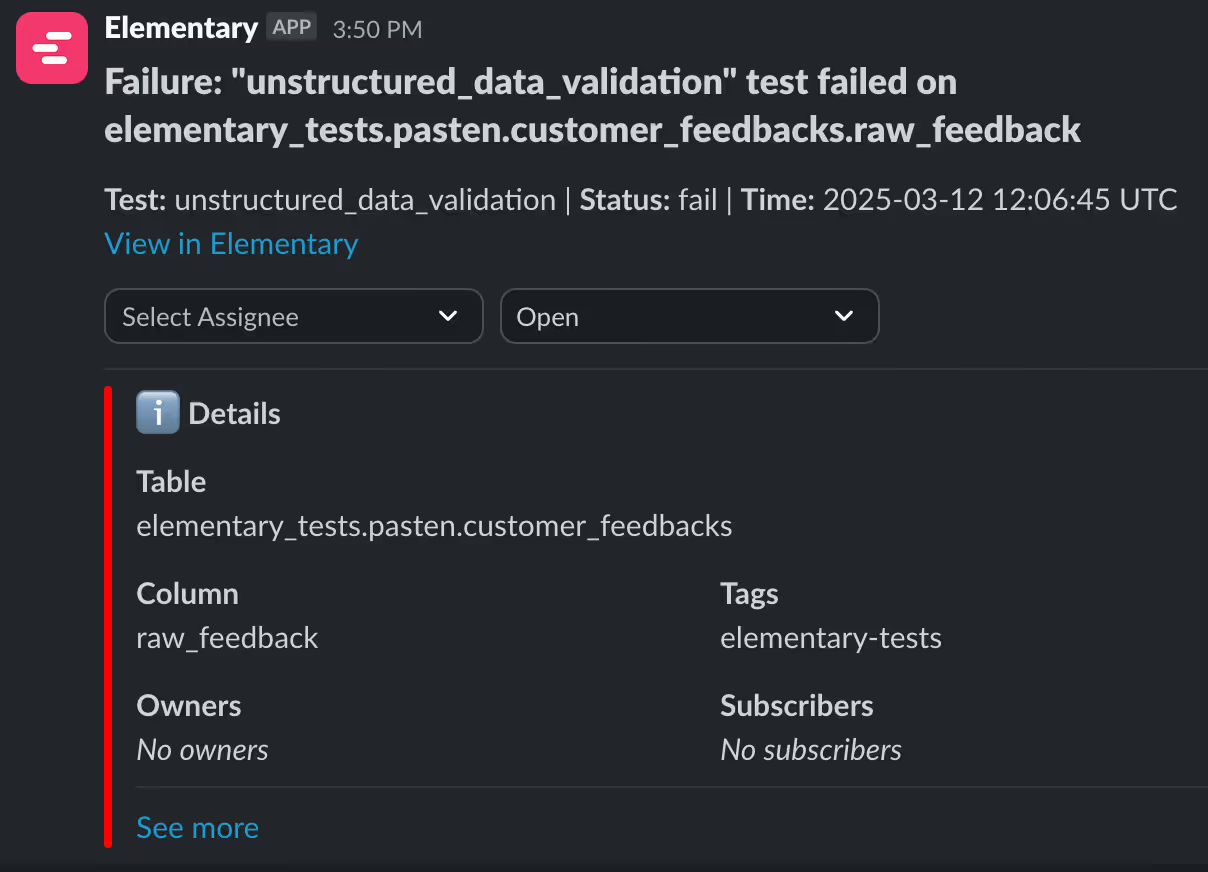

Monitoring Unstructured Data

LLMs excel at evaluating and understanding long-form text, making them the perfect backbone for unstructured data validation.

Below is an example of how you can use Elementary’s unstructured data validation:

models:

- name: customer_feedback

description: "A table containing customer feedback."

columns:

- name: feature_requests

description: "A column containing feedbacks with feature requests."

tests:

- elementary.unstructured_data_validation:

expectation_prompt: "The customer feedback has to contain at least one feature request"

This test ensures that every entry in the `feature_requests` column actually contains a feature request. If any value does not meet this expectation, the test will fail and flag the mismatched values.

Usage examples

Here are some powerful ways you can apply unstructured data validations:

Validating structure

Test fails if: A doctor’s note does not specify a time period or lacks recommendations for the patient.

Validating sentiment

models:

- name: customer_feedback

description: "A table containing customer feedback."

columns:

- name: negative_feedbacks

description: "A column containing negative feedbacks about our product."

tests:

- elementary.unstructured_data_validation:

expectation_prompt: "The customer feedback's sentiment has to be negative"

Test fails if: Any feedback in negative_feedbacks is not actually negative.

Validating similarities (coming soon)

models:

- name: summerized_pdfs

description: "A table containing a summary of our ingested PDFs."

columns:

- name: pdf_summary

description: "A column containing the main PDF's content summary."

tests:

- elementary.validate_similarity:

to: ref('pdf_source_table')

column: pdf_content

match_by: pdf_nameTest fails if: A PDF summary does not accurately represent the original PDF’s content. The validation will use the pdf name as the key to match a summary from the pdf_summary table to the pdf_content in the pdf_source_table.

models:

- name: jobs

columns:

- name: job_title

tests:

- elementary.validate_similarity:

column: job_descriptionTest fails if: The job title does not align with the job description.

Accepted categories (coming soon)

models:

- name: support_tickets

description: "A table containing customer support tickets."

columns:

- name: issue_description

description: "A column containing customer-reported issues."

tests:

- elementary.accepted_categories:

categories: ['billing', 'technical_support', 'account_access', 'other']Test fails if: A support ticket does not fall within the predefined categories.

Accepted entities (coming soon)

models:

- name: news_articles

description: "A table containing news articles."

columns:

- name: article_text

description: "A column containing full article text."

tests:

- elementary.extract_and_validate_entities:

entities:

organization:

required: true

accepted_values: ['Google', 'Amazon', 'Microsoft', 'Apple']

location:

required: false

accepted_values: {{ run_query('select zip_code from locations') }}

This test will automatically extract the given entities from the text and validate their values in the article values. If the test won’t find the required entities it will fail as well.

Compare numeric values (coming soon)

models:

- name: board_meeting_summaries

description: "A table containing board meeting summary texts."

columns:

- name: meeting_notes

description: "A column containing the full summary of the board meeting."

tests:

- elementary.extract_and_validate_numbers:

entities:

revenue:

compare_with: ref('crm_financials')

column: sum(revenue)

required: true

net_profit:

compare_with: ref('crm_financials')

column: sum(net_profit)

customer_count:

compare_with: ref('crm_customers')

column: count(customers)

required: trueThis test will automatically extract the given entities and their numbers and compare them with our structured data CRM numbers. If the test won’t find the required entities or couldn’t find their reported numbers the test will fail as well.

Our Future Plans

Currently, our approach relies on running these tests directly within dbt, leveraging the data warehouse's native capabilities. This provides significant advantages, particularly in keeping data secure and avoiding unnecessary extraction to external models. Also you can validate your structured data and unstructured data using the same workflow and get visibility into it under one single pane of glass.

However, we recognize that some data pipelines ingest unstructured data outside the data warehouse, and we aim to extend our support to those workflows as well.

Looking ahead, we plan to identify repeatable test patterns, automate common validation recipes, and introduce more built-in tests to streamline the process for our users. Additionally, we want to expand beyond text-based unstructured data and explore validation methods for other media types, such as images and videos, based on real-world use cases and community needs. By evolving our capabilities, we aim to make unstructured data validation more accessible, scalable, and adaptable across diverse data environments - similarly to what we did with structured data.

Want to Get Started?

Make sure you're running Elementary dbt package version 0.18.0 or later and check out our docs.

Join the conversation

We invite you to join our Slack community channel: #unstructured-data, to further explore unstructured data usage and monitoring.

.avif)